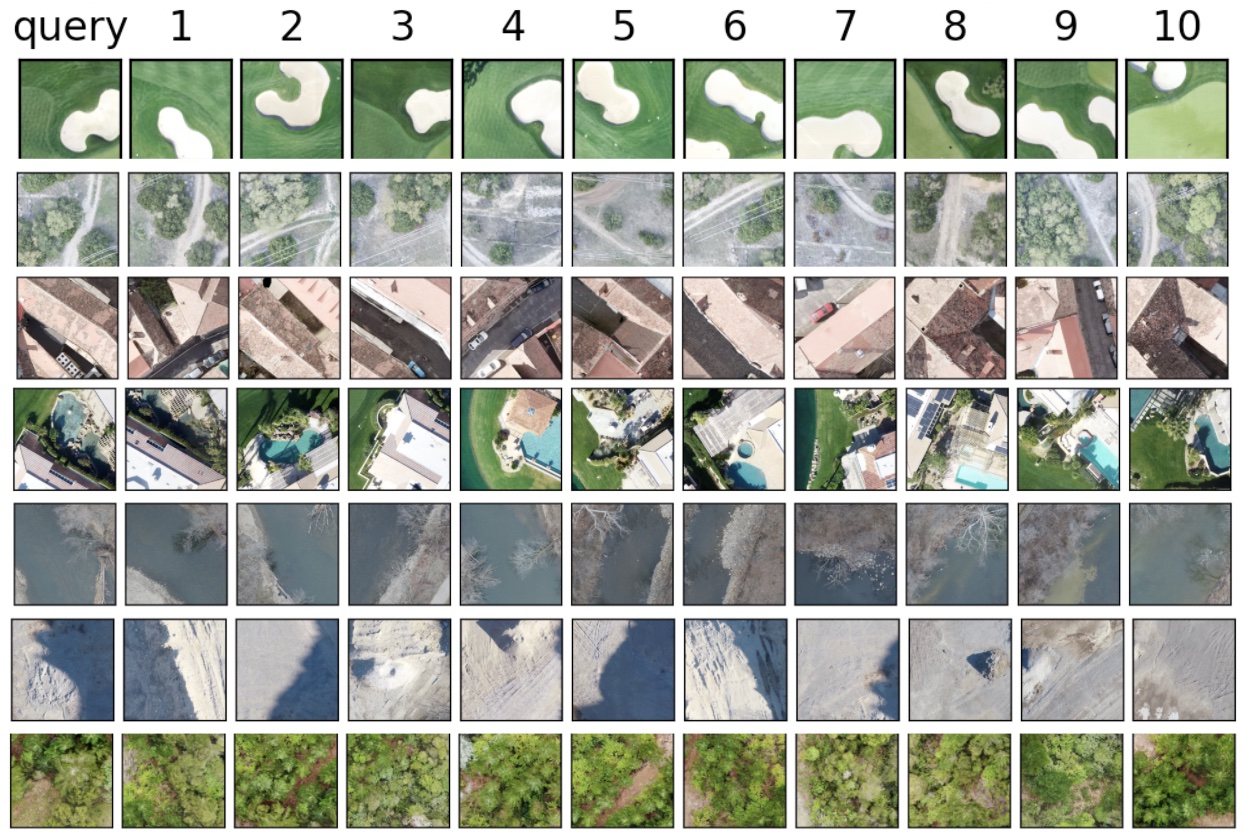

The image database used for this project was a collection of high-resolution drone images from the drone deploy git repo. The database contains 60 .TIF images of neighborhoods, natural scenes, and cities with a resolution of 10cm per pixel. When pixel values in the .TIFs are converted to 32-bit floats, the dataset has a total size of 70 GB.

These high-resolution drone images are then cut into smaller tiles, each of size 256 x 256 pixels. The tiling is acheived with a 128 pixel sliding window, so that image motifs are likely to be well centered in at least one tile. Tiles with >50% null pixel values are removed from the dataset.

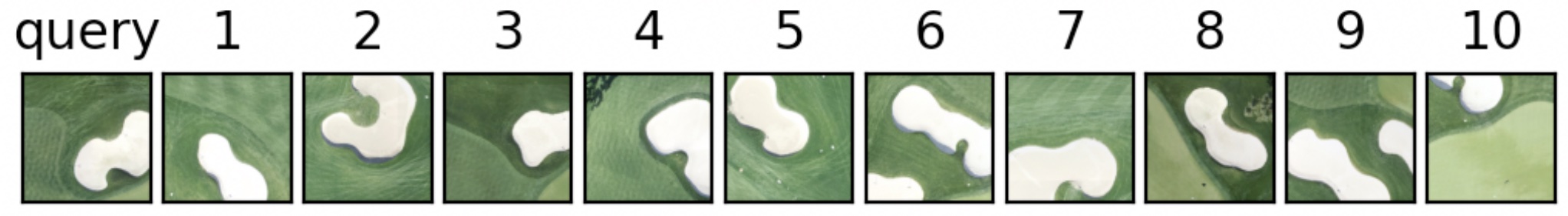

A small portion of an example .TIF image is shown the the right. The original image is 680 MB in size, and was processed into 3,836 tiles.