More info coming soon.

Content-Based Music Recommendation

Overview

This project is still a work in progress! Check the code link below for the latest updates.

Major music streaming services like Apple Music and Spotify now let you download your listening history and poke through it. I decided to take all 108,000 song streams I've accumulated over 4 years and put them to use training a music recommendation model. Recommender systems are broadly either content-based (in this case, meaning the system looks at the actual audio you've listened to) or collaborative (the system looks at the tastes of other users whose listening partially overlaps with yours). Because I don't have access to many other people's listening histories, I went with a content-based approach.

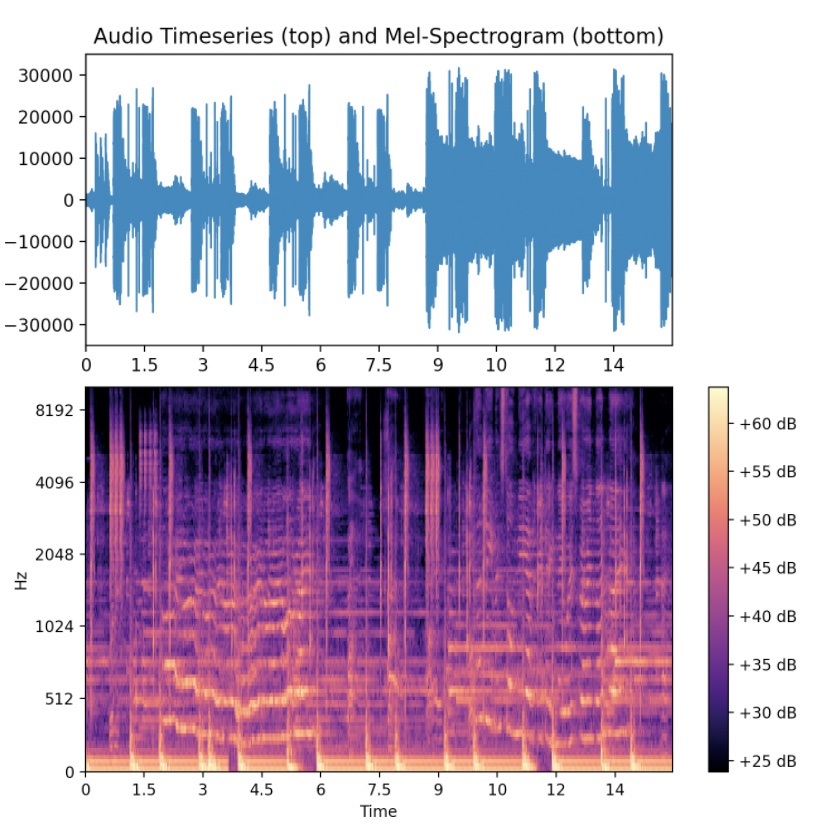

I started by defining a likeability metric for every song I had ever listened to, which ended up being a normalized measure of the total minutes I streamed that song. I then wrote a script to download the .mp3s of all these songs and convert the raw audio into mel spectrograms. A mel spectrogram turns an audio time series into a time-frequency representation whose frequency axis is scaled to better match human pitch perception. The mel spectrograms are the model input, and the likeability metric is the target the model learns to predict; the model itself is a convolutional neural network implemented in PyTorch. Everything described here has already been implemented in the code linked below, and the next to-dos are to write the training loop, train the model, and analyze the eccentricities in the weights it has learned!
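
To make the likeability idea concrete, here is a minimal sketch of one way to compute it. It is not the project's actual script: the record shape (`song_id`, milliseconds played), the function name `likeability_scores`, and the choice of min-max scaling are all assumptions made for illustration, since the exact normalization isn't spelled out above.

```python
from collections import defaultdict


def likeability_scores(streams):
    """Map each song to a score in [0, 1] based on total minutes streamed.

    `streams` is an iterable of (song_id, milliseconds_played) pairs. Both
    this record shape and the min-max scaling are assumptions for the sketch.
    """
    total_minutes = defaultdict(float)
    for song_id, ms_played in streams:
        total_minutes[song_id] += ms_played / 60_000  # milliseconds -> minutes

    if not total_minutes:
        return {}

    low = min(total_minutes.values())
    high = max(total_minutes.values())
    span = high - low
    if span == 0:
        # Every song has the same total listening time; treat them as equal.
        return {song: 1.0 for song in total_minutes}
    return {song: (minutes - low) / span for song, minutes in total_minutes.items()}


# Hypothetical listening history: three songs, four streams.
example_streams = [
    ("song_a", 180_000),
    ("song_b", 60_000),
    ("song_a", 120_000),
    ("song_c", 240_000),
]
scores = likeability_scores(example_streams)
```

In this toy history the most-streamed song scores 1 and the least-streamed scores 0. Other normalizations (a log transform, or dividing by track length) would be just as reasonable and would change how heavily a few favorites dominate the target.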

Methods

Data Collection and Preprocessing
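
As a rough illustration of the "mel" part of the mel spectrograms described in the overview, the sketch below shows how frequencies in Hz map onto the mel scale and how band centers spaced evenly in mels spread out in Hz. It uses the common HTK-style formula, which is an assumption here; the function names and settings are hypothetical, and the project's own feature extraction may well rely on a library routine rather than anything like this.

```python
import math


def hz_to_mel(freq_hz):
    """Convert a frequency in Hz to mels (HTK-style formula)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_centers(n_mels, f_min, f_max):
    """Return n_mels center frequencies in Hz, evenly spaced on the mel scale."""
    mel_min, mel_max = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (mel_max - mel_min) / (n_mels + 1)
    return [mel_to_hz(mel_min + step * (i + 1)) for i in range(n_mels)]


# Hypothetical settings: 8 bands between 0 Hz and 8 kHz.
centers = mel_band_centers(n_mels=8, f_min=0.0, f_max=8000.0)

# Distance in Hz between neighboring band centers.
gaps = [b - a for a, b in zip(centers, centers[1:])]
```

The gaps between neighboring centers grow with frequency, so low frequencies get finer resolution than high ones. That is the sense in which the frequency axis is rescaled toward human perception.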

Training the Model

More info coming soon.

Results

I don't have many results to share now, but please do check back later.

Code

Please find the link to the code below. So far, I have finished writing the song likeability metric and spectrogram feature extraction, the YouTube mp3 downloader, and the PyTorch model.py. More should be coming soon!

Code on GitHub